
Guest-Articles/2022/Phys.-Based-Bloom

Physically Based Bloom

"When using PBR the dynamic range is usually very high, so you don’t need to threshold. The blurred bloom layer is set to a low value (say 0.04), which means that only very bright pixels will bloom noticeably. That said, the whole image will receive some softness, which can be good or bad, depending on the artistic direction. But in general, it leads to more photorealistic results."

- Jorge Jimenez.

Prerequisites

You should have a solid grasp of HDR rendering, tonemapping, and gamma correction before reading this article. Going through the tutorials on this website should be sufficient.

Introduction

Bloom is cool. It is one of the most sought-after post-processing effects (post FX), thanks to its pleasing visual feel. Yet it also has a somewhat tarnished reputation. Why is that?

I know that many gamers have made it a habit to jump into the graphics settings and disable bloom whenever they install a new game. I have met a few of them, and besides the added rendering cost, bloom has very often been misused, which is probably one of the main reasons why so many gamers are reluctant to enable it.

To understand why we want bloom, we must also understand why we might not want it, as that perspective is equally important for forming a well-informed opinion. Once we have discussed the drawbacks, we will have a better understanding of how to do it right.

So without further ado...

The bloom disaster

For anyone who wishes to understand bloom or even implement it themselves, this article is an absolute must-read: Bloom disasters. This statement cannot be stressed enough. Consistent misuse of the effect throughout the pre-HDR gamedev era has given rise to suspicion and outright disdain. And looking at the images, it is probably not hard to understand why:

- Everything that is white or very bright just becomes a mess of light, actually hurting the eyes.

- Anything in close vicinity to bright areas is absorbed in a fiery explosion of brightness.

- Everything is just too washed-out because of excessive blur.

- The rendered images lose contrast because of an artificial threshold. Everything above a certain level is just "white", and aliasing artifacts ("jaggies") in dark areas become even more noticeable because blur is not applied to those areas.

Besides these obvious drawbacks, bloom is also very difficult to get right when applied to a thresholded color image (i.e. only to pixels above a certain brightness level). The threshold level also needs a different value depending on whether or not bloom is applied to an HDR image. Furthermore, for very bright scenes we might want a higher threshold, and for darker scenes a lower one, so as to simulate the natural adaptation to changes in luminance that our eyes perform.

Moreover, applying a threshold causes problems when we are using a physically based rendering approach, because bloom in the real world applies to everything, not only to bright objects! The perfect lens does not exist, so even with very high-quality cameras bloom effects can still be observed, because light is scattered in the lens (and thus "bleeds out"). Our eyes are not perfect either, so all incoming light scatters to some degree. The effect is simply more visible around bright objects because they emit more photons.

Bloom done right

Today, physically based rendering with HDR and tonemapping is standard practice across much of the graphics industry. So it makes sense, from a "modern" perspective, to compose a bloom effect that fits into such pipelines. The finer details differ, but the overall process is this:

- Scene with lighting applied is rendered into an HDR color buffer.

- To mimic how our eyes work, we do not use a color threshold, but instead downsample and blur directly on the HDR color buffer and store this result into a bloom buffer.

- We render a mix between the HDR color buffer and the bloom buffer, which is usually a linear interpolation with a strong bias (~0.04) towards the HDR color buffer.

It may seem counter-intuitive to bloom the entire scene and not only the bright objects, but since we are composing a bloom effect for an HDR pipeline, this is not a problem. With HDR we usually have a huge range between dark and bright, and because bright HDR values dominate the weighted averages produced by downsampling and blurring, the effect on dark areas of the scene is barely noticeable. So we get all the benefits of a good bloom effect, but it is more physically correct, and we don't have to worry about defining a good color threshold.

By reading this article, we can see that very large filter kernels such as 41x41 can be approximated quite well by applying a small filter kernel, such as 3x3, on a downsampled version of the image. This should be an order of magnitude faster, since we perform far fewer texture lookups. Naturally, the exact savings depend on the resolution of the source texture, the filter kernel size, and the number of times we downsample. But in general, we will save a lot of computation!
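To put numbers on the final mix, here is a minimal CPU-side C++ sketch of the composite step, assuming it behaves like GLSL `mix(hdr, bloom, strength)`; the `Rgb` struct and `mixBloom` function are hypothetical names used only for illustration. With a strength of 0.04, a dark pixel whose blurred neighbourhood is equally dark stays where it was, whereas a dark pixel that receives glow from a nearby bright source (a bloom value of 5.0 in the example) is lifted noticeably, from 0.01 to about 0.21.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical stand-in for a GLSL vec3 holding linear HDR color.
struct Rgb { float r, g, b; };

// Equivalent of GLSL mix(hdr, bloom, strength): hdr * (1 - strength) + bloom * strength.
Rgb mixBloom(Rgb hdr, Rgb bloom, float strength)
{
    assert(strength >= 0.0f && strength <= 1.0f);
    auto lerp = [strength](float a, float b) { return a + (b - a) * strength; };
    return { lerp(hdr.r, bloom.r), lerp(hdr.g, bloom.g), lerp(hdr.b, bloom.b) };
}
```

Because the bias towards the HDR buffer is so strong, the bloom layer only becomes visible where it is much brighter than the pixel it lands on.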
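A rough count of texture lookups makes the savings concrete. The sketch below assumes a 1920x1080 source, a 5-level chain built by integer halving, 13 lookups per pixel for each downsample pass and 9 per pixel for each upsample pass (the tap counts of the shaders in this article), and counts every bilinear fetch as a single lookup; the function names are hypothetical. Under those assumptions the direct 41x41 kernel needs about 3.5 billion lookups per frame versus roughly 15 million for the mip chain, although real timings also depend on caches, bandwidth, and per-pass overhead.

```cpp
#include <cassert>
#include <cstdint>

// Lookups for a direct k x k convolution evaluated at every full-resolution pixel.
std::uint64_t directLookups(std::uint64_t width, std::uint64_t height, std::uint64_t k)
{
    return width * height * k * k;
}

// Lookups for the mip-chain approach (hypothetical helper). Each level halves both axes;
// every level is written once by the 13-tap downsample, and every level except the
// smallest is written once more by the 9-tap upsample.
std::uint64_t mipChainLookups(std::uint64_t width, std::uint64_t height, unsigned levels)
{
    assert(width > 0 && height > 0);
    std::uint64_t total = 0;
    for (unsigned i = 0; i < levels; ++i) {
        width /= 2;
        height /= 2;
        total += width * height * 13;     // downsample pass writing this level
        if (i + 1 < levels)
            total += width * height * 9;  // upsample pass writing this level
    }
    return total;
}
```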

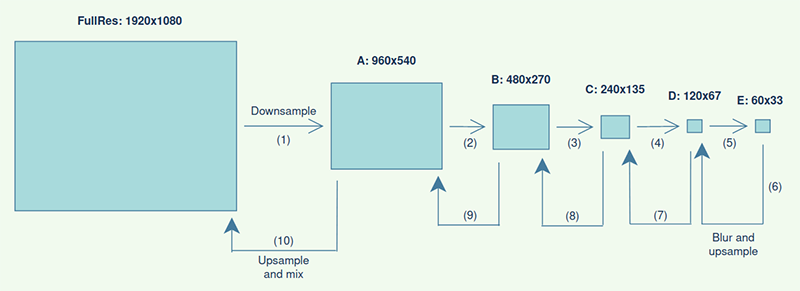

The quality of the bloom is highly dependent upon the downsampling filter, so we need to use a good one. Poorly designed filters lead to pulsating artifacts and temporal stability issues. The method that inspired this article was presented at ACM Siggraph in 2014 by Jorge Jimenez for Call of Duty: Advanced Warfare. The algorithm follows this recipe:

- Run a shader which downsamples (downscales) the HDR buffer containing per-pixel color with light and shadows applied. This shader is run a fixed number of times to continually produce a smaller image, each time with half resolution in both X and Y axes.

- We then run a small 3x3 filter kernel on each downsampled image, and progressively upsample them until we reach image A (first downsampled image).

- Finally, we mix the overall bloom contribution into the HDR source image, with a strong bias towards the HDR source.

We can go smaller if we wish, e.g. by also adding a texture "F", but in this drawing it would probably be too small to see. Also note that we use the word "progressively" when we upsample: we are not upsampling directly from E → A, D → A, etc. Instead, we perform the upsampling gradually, one step at a time up through the image chain. This results in a much better blur. We also do not need to run the 3x3 filter kernel several times on each image, as the combined downsampling and upsampling produces a blur that closely resembles a Gaussian filter.
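To make the progressive ordering concrete, here is a 1D, CPU-side sketch of the data flow; it is an illustration, not the GPU implementation. Assumptions: a pair average stands in for the 13-tap downsample, the upsample places each low-resolution texel with a nearest-neighbour lookup and applies a [1 2 1]/4 tent with a one-texel radius, edges are clamped, and all names are hypothetical. The additive `upsampleAdd` step corresponds to D' = D + blur(E'), applied from the smallest level up to A.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Row = std::vector<float>;

// Clamp-to-edge fetch, mirroring GL_CLAMP_TO_EDGE.
float fetchClamped(const Row& row, long i)
{
    const long last = static_cast<long>(row.size()) - 1;
    if (i < 0) i = 0;
    if (i > last) i = last;
    return row[static_cast<std::size_t>(i)];
}

// Halve the resolution by averaging pairs (1D stand-in for the 13-tap downsample).
Row downsamplePairs(const Row& src)
{
    Row dst(src.size() / 2);
    for (std::size_t i = 0; i < dst.size(); ++i)
        dst[i] = 0.5f * (src[2 * i] + src[2 * i + 1]);
    return dst;
}

// Additive upsample step: high += tent([1 2 1] / 4) of low, i.e. D' = D + blur(E').
void upsampleAdd(const Row& low, Row& high)
{
    for (std::size_t i = 0; i < high.size(); ++i) {
        const long j = static_cast<long>(i / 2);  // nearest low-resolution texel
        high[i] += 0.25f * fetchClamped(low, j - 1)
                 + 0.5f  * fetchClamped(low, j)
                 + 0.25f * fetchClamped(low, j + 1);
    }
}

// Build the chain A, B, C, ... from 'src', then fold it back up into A (hypothetical helper).
Row bloom1D(const Row& src, int levels)
{
    assert(levels >= 1 && (src.size() >> levels) >= 1);
    std::vector<Row> chain{downsamplePairs(src)};
    for (int i = 1; i < levels; ++i)
        chain.push_back(downsamplePairs(chain.back()));
    for (int i = levels - 1; i > 0; --i)
        upsampleAdd(chain[i], chain[i - 1]);      // E -> D, D -> C, ..., B -> A
    return chain[0];
}
```

Feeding a single bright texel through four levels spreads its energy over much of A, whereas downsampling alone would leave it in a single texel.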

Downsampling

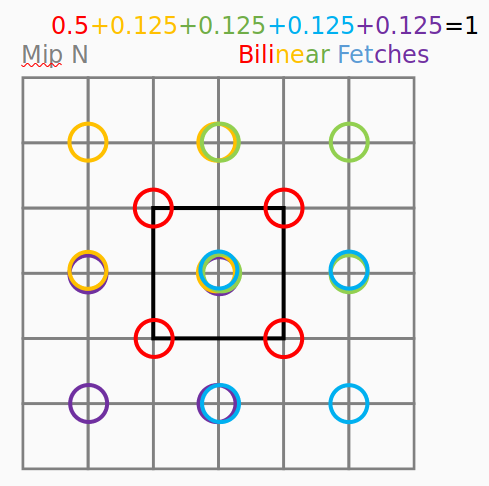

Now that we have the algorithm in place, we just need to figure out which filter kernels to use. Fortunately, the people at Sledgehammer Games did the hard work for us. They present a custom-designed downsample kernel that performs 13 texture lookups around the current pixel and blends the values using a weighted average. Because bilinear filtering is enabled, each of these 13 lookups blends a 2x2 block of source texels, so together they cover a 6x6 footprint of 36 source texels. :)

The above image deserves some explanation: the lookup pattern is divided into 5 colored regions. The center pixel, which corresponds to the "current pixel", has 4 colored rings on top of each other. As the text in the image indicates, the 4 red texels together contribute 0.5 of the final result, so that texels closer to the center pixel are weighted higher. You may also take a look at the slides from the 2014 presentation, which provide a little more information. Below is the downsample fragment shader. I have added some thorough documentation (an encouraged software development practice, btw! :)) to help you understand how the filtering works:

```glsl
#version 330 core

// This shader performs downsampling on a texture,
// as taken from the Call of Duty method presented at ACM SIGGRAPH 2014.
// This particular method was custom-designed to eliminate
// "pulsating artifacts and temporal stability issues".

// Remember to add a bilinear minification filter for this texture!
// Remember to use a floating-point texture format (for HDR)!
// Remember to use edge clamping for this texture!
uniform sampler2D srcTexture;
uniform vec2 srcResolution;

in vec2 texCoord;
layout (location = 0) out vec3 downsample;

void main()
{
    vec2 srcTexelSize = 1.0 / srcResolution;
    float x = srcTexelSize.x;
    float y = srcTexelSize.y;

    // Take 13 samples around current texel:
    // a - b - c
    // - j - k -
    // d - e - f
    // - l - m -
    // g - h - i
    // === ('e' is the current texel) ===
    vec3 a = texture(srcTexture, vec2(texCoord.x - 2*x, texCoord.y + 2*y)).rgb;
    vec3 b = texture(srcTexture, vec2(texCoord.x,       texCoord.y + 2*y)).rgb;
    vec3 c = texture(srcTexture, vec2(texCoord.x + 2*x, texCoord.y + 2*y)).rgb;

    vec3 d = texture(srcTexture, vec2(texCoord.x - 2*x, texCoord.y)).rgb;
    vec3 e = texture(srcTexture, vec2(texCoord.x,       texCoord.y)).rgb;
    vec3 f = texture(srcTexture, vec2(texCoord.x + 2*x, texCoord.y)).rgb;

    vec3 g = texture(srcTexture, vec2(texCoord.x - 2*x, texCoord.y - 2*y)).rgb;
    vec3 h = texture(srcTexture, vec2(texCoord.x,       texCoord.y - 2*y)).rgb;
    vec3 i = texture(srcTexture, vec2(texCoord.x + 2*x, texCoord.y - 2*y)).rgb;

    vec3 j = texture(srcTexture, vec2(texCoord.x - x, texCoord.y + y)).rgb;
    vec3 k = texture(srcTexture, vec2(texCoord.x + x, texCoord.y + y)).rgb;
    vec3 l = texture(srcTexture, vec2(texCoord.x - x, texCoord.y - y)).rgb;
    vec3 m = texture(srcTexture, vec2(texCoord.x + x, texCoord.y - y)).rgb;

    // Apply weighted distribution:
    // 0.5 + 0.125 + 0.125 + 0.125 + 0.125 = 1
    // a,b,d,e * 0.125
    // b,c,e,f * 0.125
    // d,e,g,h * 0.125
    // e,f,h,i * 0.125
    // j,k,l,m * 0.5
    // This shows 5 square areas that are being sampled. But some of them overlap,
    // so to have an energy preserving downsample we need to make some adjustments.
    // The weights are then distributed, so that the sum of j,k,l,m (e.g.)
    // contribute 0.5 to the final color output. The code below is written
    // to effectively yield this sum. We get:
    // 0.125*5 + 0.03125*4 + 0.0625*4 = 1
    downsample = e*0.125;
    downsample += (a+c+g+i)*0.03125;
    downsample += (b+d+f+h)*0.0625;
    downsample += (j+k+l+m)*0.125;
}
```
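The shader weights can be derived mechanically from the five overlapping 2x2 boxes listed in its comments. This small C++ sketch (the `tap` indices and `deriveDownsampleWeights` are hypothetical names) accumulates each box's weight, split evenly over its four taps, and reproduces the factors used above: 0.125 for e and for j, k, l, m; 0.0625 for b, d, f, h; and 0.03125 for the corners a, c, g, i, which together sum to 1.

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Tap indices of the 13-tap downsample, named as in the shader ('e' is the centre).
namespace tap {
enum : int { a, b, c, d, e, f, g, h, i, j, k, l, m, count };
}

// Hypothetical helper: accumulate each box's weight onto the taps it averages.
std::array<double, tap::count> deriveDownsampleWeights()
{
    struct Box { std::array<int, 4> taps; double weight; };
    const std::array<Box, 5> boxes = {{
        {{tap::a, tap::b, tap::d, tap::e}, 0.125},  // top-left 2x2 box
        {{tap::b, tap::c, tap::e, tap::f}, 0.125},  // top-right 2x2 box
        {{tap::d, tap::e, tap::g, tap::h}, 0.125},  // bottom-left 2x2 box
        {{tap::e, tap::f, tap::h, tap::i}, 0.125},  // bottom-right 2x2 box
        {{tap::j, tap::k, tap::l, tap::m}, 0.5},    // centre box (the red texels)
    }};

    std::array<double, tap::count> w{};             // all weights start at zero
    for (const Box& box : boxes)
        for (int t : box.taps)
            w[t] += box.weight / 4.0;               // each box is a plain average of 4 taps
    return w;
}
```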

Upsampling

The upsampling shader works the opposite way, i.e. it progressively blurs and upsamples. Using the letters A-E from the previous figure, this is the flow:

```
E' = E
D' = D + blur(E', b4)
C' = C + blur(D', b3)
B' = B + blur(C', b2)
A' = A + blur(B', b1)
FullRes = mix(FullRes, A', bloomStrength)
```

This means that we blur as we upsample, using a 3x3 filter kernel. For example, when we perform the second line, we have D bound as the render target (color attachment 0) and sample from E with a 3x3 kernel around the current pixel, using b4 as a radius to spread out the lookup positions. An important detail is that we need a different radius for each upsample, with larger radii (in texels) on larger mips. By specifying the radius in texture coordinates rather than in texels, this happens automatically: the same radius covers more texels as we move up the chain. The upsampling fragment shader is shown here:

```glsl
#version 330 core

// This shader performs upsampling on a texture,
// as taken from the Call of Duty method presented at ACM SIGGRAPH 2014.

// Remember to add a bilinear magnification filter for this texture!
// Remember to use a floating-point texture format (for HDR)!
// Remember to use edge clamping for this texture!
uniform sampler2D srcTexture;
uniform float filterRadius;

in vec2 texCoord;
layout (location = 0) out vec3 upsample;

void main()
{
    // The filter kernel is applied with a radius, specified in texture
    // coordinates, so that the radius will vary across mip resolutions.
    float x = filterRadius;
    float y = filterRadius;

    // Take 9 samples around current texel:
    // a - b - c
    // d - e - f
    // g - h - i
    // === ('e' is the current texel) ===
    vec3 a = texture(srcTexture, vec2(texCoord.x - x, texCoord.y + y)).rgb;
    vec3 b = texture(srcTexture, vec2(texCoord.x,     texCoord.y + y)).rgb;
    vec3 c = texture(srcTexture, vec2(texCoord.x + x, texCoord.y + y)).rgb;

    vec3 d = texture(srcTexture, vec2(texCoord.x - x, texCoord.y)).rgb;
    vec3 e = texture(srcTexture, vec2(texCoord.x,     texCoord.y)).rgb;
    vec3 f = texture(srcTexture, vec2(texCoord.x + x, texCoord.y)).rgb;

    vec3 g = texture(srcTexture, vec2(texCoord.x - x, texCoord.y - y)).rgb;
    vec3 h = texture(srcTexture, vec2(texCoord.x,     texCoord.y - y)).rgb;
    vec3 i = texture(srcTexture, vec2(texCoord.x + x, texCoord.y - y)).rgb;

    // Apply weighted distribution, by using a 3x3 tent filter:
    //  1   | 1 2 1 |
    // -- * | 2 4 2 |
    // 16   | 1 2 1 |
    upsample = e*4.0;
    upsample += (b+d+f+h)*2.0;
    upsample += (a+c+g+i);
    upsample *= 1.0 / 16.0;
}
```
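The 1-2-1 weights are not arbitrary: the 3x3 tent is the outer product of the 1D tent [1 2 1]/4 with itself, so its weights sum to 1 and a flat region passes through unchanged. A quick C++ check of that property, modelling a single color channel (`Grid3`, `tentKernel`, and `applyKernel` are hypothetical names):

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Grid3 = std::array<std::array<float, 3>, 3>;

// 3x3 tent filter built as the outer product of the 1D tent [1 2 1] / 4 with itself.
Grid3 tentKernel()
{
    const std::array<float, 3> tent1D = {0.25f, 0.5f, 0.25f};
    Grid3 k{};
    for (int y = 0; y < 3; ++y)
        for (int x = 0; x < 3; ++x)
            k[y][x] = tent1D[y] * tent1D[x];
    return k;
}

// Weighted sum of a 3x3 neighbourhood, as the upsample shader computes per channel.
float applyKernel(const Grid3& k, const Grid3& samples)
{
    float sum = 0.0f;
    for (int y = 0; y < 3; ++y)
        for (int x = 0; x < 3; ++x)
            sum += k[y][x] * samples[y][x];
    return sum;
}
```

Being separable, the same kernel could also be applied as two 3-tap passes, but with only 9 taps a single pass is simpler.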

Both the upsampling and downsampling shaders need a vertex shader that renders a screen-filling quad:

```glsl
#version 330 core

layout (location = 0) in vec2 aPosition;
layout (location = 1) in vec2 aTexCoord;

out vec2 texCoord;

void main()
{
    gl_Position = vec4(aPosition.x, aPosition.y, 0.0, 1.0);
    texCoord = aTexCoord;
}
```

Writing the program

Now that we have the shaders and the algorithm out of the way, we need to define the CPU side of things as well. We need one framebuffer for the downsampling and upsampling, some textures to contain the mips, two shader programs, a prerendered HDR color buffer, and some geometry to render. I will not demonstrate how to do the last two things in this tutorial, as I consider them trivial or out of scope :). For the bloom framebuffer I decided to go for a minimalistic object-oriented approach, but you are welcome to structure this in any way that you see fit.

```cpp
struct bloomMip
{
    glm::vec2 size;
    glm::ivec2 intSize;
    unsigned int texture;
};

class bloomFBO
{
public:
    bloomFBO();
    ~bloomFBO();
    bool Init(unsigned int windowWidth, unsigned int windowHeight, unsigned int mipChainLength);
    void Destroy();
    void BindForWriting();
    const std::vector<bloomMip>& MipChain() const;

private:
    bool mInit;
    unsigned int mFBO;
    std::vector<bloomMip> mMipChain;
};
```

The most important method here is the Init function. Starting from the window resolution, it creates mipChainLength mip textures, each half the size of the previous one; these correspond to the letters A-E described earlier. You may go as small as you wish, though 5 or 6 mips are usually good enough. If you go very small, make sure that you stop when you reach a mip size of 1x1!
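One way to respect the 1x1 limit is to compute the chain sizes up front and clamp the requested length. This is a minimal sketch, assuming the same integer halving that bloomFBO::Init performs and stopping as soon as either axis would drop to 0; `MipSize` and `bloomMipSizes` are hypothetical names. For a 1920x1080 window, at most 10 levels are possible (the last one being 1x1), and a 5-level chain ends at 60x33.

```cpp
#include <cassert>
#include <vector>

struct MipSize { int width, height; };

// Sizes of a bloom mip chain (hypothetical helper): each level halves both axes with
// integer division, as bloomFBO::Init does, and the chain stops early once either axis
// would reach 0, so the smallest possible level is 1 pixel on its shorter axis.
std::vector<MipSize> bloomMipSizes(int width, int height, int maxLevels)
{
    assert(width > 0 && height > 0 && maxLevels >= 0);
    std::vector<MipSize> sizes;
    for (int i = 0; i < maxLevels; ++i) {
        width /= 2;
        height /= 2;
        if (width < 1 || height < 1)
            break;  // a 0-sized texture cannot be used as a render target
        sizes.push_back({width, height});
    }
    return sizes;
}
```

The size of the returned vector could then be passed as mipChainLength instead of a fixed constant.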

```cpp
bool bloomFBO::Init(unsigned int windowWidth, unsigned int windowHeight, unsigned int mipChainLength)
{
    if (mInit) return true;

    // Safety check (done before any GL objects are created)
    if (windowWidth > (unsigned int)INT_MAX || windowHeight > (unsigned int)INT_MAX) {
        std::cerr << "Window size conversion overflow - cannot build bloom FBO!\n";
        return false;
    }
    if (mipChainLength == 0) {
        std::cerr << "Bloom mip chain must contain at least one mip!\n";
        return false;
    }

    glGenFramebuffers(1, &mFBO);
    glBindFramebuffer(GL_FRAMEBUFFER, mFBO);

    glm::vec2 mipSize((float)windowWidth, (float)windowHeight);
    glm::ivec2 mipIntSize((int)windowWidth, (int)windowHeight);

    for (unsigned int i = 0; i < mipChainLength; i++)
    {
        bloomMip mip;

        mipSize *= 0.5f;
        mipIntSize /= 2;
        mip.size = mipSize;
        mip.intSize = mipIntSize;

        glGenTextures(1, &mip.texture);
        glBindTexture(GL_TEXTURE_2D, mip.texture);
        // we are downscaling an HDR color buffer, so we need a float texture format
        glTexImage2D(GL_TEXTURE_2D, 0, GL_R11F_G11F_B10F,
                     mipIntSize.x, mipIntSize.y,
                     0, GL_RGB, GL_FLOAT, nullptr);
        glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);
        glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);
        glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);
        glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);

        mMipChain.emplace_back(mip);
    }

    glFramebufferTexture2D(GL_FRAMEBUFFER, GL_COLOR_ATTACHMENT0,
                           GL_TEXTURE_2D, mMipChain[0].texture, 0);

    // setup attachments
    unsigned int attachments[1] = { GL_COLOR_ATTACHMENT0 };
    glDrawBuffers(1, attachments);

    // check completion status
    GLenum status = glCheckFramebufferStatus(GL_FRAMEBUFFER);
    if (status != GL_FRAMEBUFFER_COMPLETE)
    {
        printf("bloom FBO error, status: 0x%x\n", status);
        glBindFramebuffer(GL_FRAMEBUFFER, 0);
        return false;
    }

    glBindFramebuffer(GL_FRAMEBUFFER, 0);
    mInit = true;
    return true;
}
```

The code should speak for itself. One thing to note is that we must enable linear filtering and edge clamping for all mips. Since we don't need an alpha channel, we use the internal storage format GL_R11F_G11F_B10F for the textures in the mip chain: it stores an unsigned floating-point RGB color in 32 bits per texel, half the size of GL_RGBA16F, at the cost of some precision and of not being able to store negative values.
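To put the format choice in numbers, here is a small sketch that sums the storage of a mip chain, assuming the same integer halving as Init and the nominal texel sizes of 4 bytes for GL_R11F_G11F_B10F and 8 bytes for GL_RGBA16F; `mipChainBytes` is a hypothetical helper, and drivers may add padding of their own. For a 1920x1080 window and 5 mips, the chain holds 690,420 texels, about 2.8 MB in GL_R11F_G11F_B10F versus about 5.5 MB in GL_RGBA16F.

```cpp
#include <cassert>
#include <cstddef>

// Total bytes of a bloom mip chain for a given storage size per texel (hypothetical helper).
// Nominal sizes: GL_R11F_G11F_B10F = 4 bytes, GL_RGBA16F = 8 bytes.
std::size_t mipChainBytes(int width, int height, int levels, std::size_t bytesPerTexel)
{
    assert(width > 0 && height > 0 && levels >= 0);
    std::size_t total = 0;
    for (int i = 0; i < levels; ++i) {
        width /= 2;   // same integer halving as bloomFBO::Init
        height /= 2;
        total += static_cast<std::size_t>(width) * static_cast<std::size_t>(height) * bytesPerTexel;
    }
    return total;
}
```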

The other methods in the class are given below:

```cpp
bloomFBO::bloomFBO() : mInit(false) {}
bloomFBO::~bloomFBO() {}

void bloomFBO::Destroy()
{
    for (size_t i = 0; i < mMipChain.size(); i++) {
        glDeleteTextures(1, &mMipChain[i].texture);
        mMipChain[i].texture = 0;
    }
    mMipChain.clear(); // so that a later Init() starts from an empty chain
    glDeleteFramebuffers(1, &mFBO);
    mFBO = 0;
    mInit = false;
}

void bloomFBO::BindForWriting()
{
    glBindFramebuffer(GL_FRAMEBUFFER, mFBO);
}

const std::vector<bloomMip>& bloomFBO::MipChain() const
{
    return mMipChain;
}
```

After this, we create another class which contains a bloomFBO object and makes a call to it every frame. I chose this design because the framebuffer and the shaders are two distinct concerns. The code is given below:

```cpp
class BloomRenderer
{
public:
    BloomRenderer();
    ~BloomRenderer();
    bool Init(unsigned int windowWidth, unsigned int windowHeight);
    void Destroy();
    void RenderBloomTexture(unsigned int srcTexture, float filterRadius);
    unsigned int BloomTexture();

private:
    void RenderDownsamples(unsigned int srcTexture);
    void RenderUpsamples(float filterRadius);

    bool mInit;
    bloomFBO mFBO;
    glm::ivec2 mSrcViewportSize;
    glm::vec2 mSrcViewportSizeFloat;
    Shader* mDownsampleShader;
    Shader* mUpsampleShader;
};
```

Again, we start with the Init function. This should initialize the data member that contains the framebuffer and load the shader programs:

```cpp
bool BloomRenderer::Init(unsigned int windowWidth, unsigned int windowHeight)
{
    if (mInit) return true;
    mSrcViewportSize = glm::ivec2(windowWidth, windowHeight);
    mSrcViewportSizeFloat = glm::vec2((float)windowWidth, (float)windowHeight);

    // Framebuffer
    const unsigned int num_bloom_mips = 5; // Experiment with this value
    bool status = mFBO.Init(windowWidth, windowHeight, num_bloom_mips);
    if (!status) {
        std::cerr << "Failed to initialize bloom FBO - cannot create bloom renderer!\n";
        return false;
    }

    // Shaders
    mDownsampleShader = new Shader("downsample.vs", "downsample.fs");
    mUpsampleShader = new Shader("upsample.vs", "upsample.fs");

    // Downsample
    mDownsampleShader->use();
    mDownsampleShader->setInt("srcTexture", 0);
    mDownsampleShader->Deactivate();

    // Upsample
    mUpsampleShader->use();
    mUpsampleShader->setInt("srcTexture", 0);
    mUpsampleShader->Deactivate();

    mInit = true;
    return true;
}
```

And the rest of the public methods:

```cpp
BloomRenderer::BloomRenderer() : mInit(false) {}
BloomRenderer::~BloomRenderer() {}

void BloomRenderer::Destroy()
{
    mFBO.Destroy();
    delete mDownsampleShader;
    delete mUpsampleShader;
    mInit = false;
}

void BloomRenderer::RenderBloomTexture(unsigned int srcTexture, float filterRadius)
{
    mFBO.BindForWriting();

    this->RenderDownsamples(srcTexture);
    this->RenderUpsamples(filterRadius);

    glBindFramebuffer(GL_FRAMEBUFFER, 0);
    // Restore viewport
    glViewport(0, 0, mSrcViewportSize.x, mSrcViewportSize.y);
}

unsigned int BloomRenderer::BloomTexture()
{
    return mFBO.MipChain()[0].texture;
}
```

The Destroy method takes care of cleanup. BloomTexture retrieves the texture handle of the bloom texture after bloom has been performed; this could, for example, be a sampler2D input for your tonemapping shader. RenderBloomTexture performs all the work: it binds the bloom framebuffer, renders the downsamples from the input srcTexture (your HDR color buffer), and then progressively upsamples. Finally, it binds the default framebuffer and restores the viewport size.

The only things we are missing now are the two private methods, which render the downsamples and upsamples. I have done my best to add some comments explaining what is happening:

```cpp
void BloomRenderer::RenderDownsamples(unsigned int srcTexture)
{
    const std::vector<bloomMip>& mipChain = mFBO.MipChain();

    mDownsampleShader->use();
    mDownsampleShader->setVec2("srcResolution", mSrcViewportSizeFloat);

    // Bind srcTexture (HDR color buffer) as initial texture input
    glActiveTexture(GL_TEXTURE0);
    glBindTexture(GL_TEXTURE_2D, srcTexture);

    // Progressively downsample through the mip chain
    for (size_t i = 0; i < mipChain.size(); i++)
    {
        const bloomMip& mip = mipChain[i];
        glViewport(0, 0, mip.intSize.x, mip.intSize.y);
        glFramebufferTexture2D(GL_FRAMEBUFFER, GL_COLOR_ATTACHMENT0,
                               GL_TEXTURE_2D, mip.texture, 0);

        // Render screen-filled quad of resolution of current mip
        glBindVertexArray(quadVAO);
        glDrawArrays(GL_TRIANGLES, 0, 6);
        glBindVertexArray(0);

        // Set current mip resolution as srcResolution for next iteration
        mDownsampleShader->setVec2("srcResolution", mip.size);
        // Set current mip as texture input for next iteration
        glBindTexture(GL_TEXTURE_2D, mip.texture);
    }

    mDownsampleShader->Deactivate();
}

void BloomRenderer::RenderUpsamples(float filterRadius)
{
    const std::vector<bloomMip>& mipChain = mFBO.MipChain();

    mUpsampleShader->use();
    mUpsampleShader->setFloat("filterRadius", filterRadius);

    // Enable additive blending
    glEnable(GL_BLEND);
    glBlendFunc(GL_ONE, GL_ONE);
    glBlendEquation(GL_FUNC_ADD);

    for (int i = (int)mipChain.size() - 1; i > 0; i--)
    {
        const bloomMip& mip = mipChain[i];
        const bloomMip& nextMip = mipChain[i-1];

        // Bind the texture we read from
        glActiveTexture(GL_TEXTURE0);
        glBindTexture(GL_TEXTURE_2D, mip.texture);

        // Set viewport and framebuffer render target (we write to this texture)
        glViewport(0, 0, nextMip.intSize.x, nextMip.intSize.y);
        glFramebufferTexture2D(GL_FRAMEBUFFER, GL_COLOR_ATTACHMENT0,
                               GL_TEXTURE_2D, nextMip.texture, 0);

        // Render screen-filled quad of resolution of current mip
        glBindVertexArray(quadVAO);
        glDrawArrays(GL_TRIANGLES, 0, 6);
        glBindVertexArray(0);
    }

    // Disable additive blending
    //glBlendFunc(GL_ONE, GL_ONE_MINUS_SRC_ALPHA); // Restore if this was default
    glDisable(GL_BLEND);

    mUpsampleShader->Deactivate();
}
```

Both methods rely on quadVAO, which is created beforehand (its setup code is given a little further down). During downsampling we fetch the mip chain, activate the shader, and then loop through the mip chain, performing one downsample per mip. For each iteration we must change some parameters, including the input texture handle and the target framebuffer color attachment. We also set the viewport to the size of the target attachment so that the OpenGL rasterizer creates the correct number of fragments for us.

The upsampling is very similar, except that it works in the opposite order: it starts from the low-resolution mips and works backwards up the mip chain. We start by fetching the mip chain and activating the shader. For the filter radius you are free to experiment, but I recommend a small value like 0.005f. Then we enable additive blending so that we can perform the D' = D + blur(E', b4) operation described earlier. With additive blending, the blurred result is added on top of the contents of D that are already in the render target, so D is updated in place to become D' and we don't need a separate copy of D. In this expression, b4 is the filter radius given in texture coordinates (not in pixels!). Note the variables mip and nextMip: we read from mip and write into nextMip, which for the first iteration means that mip is the smallest texture in the mip chain and nextMip is the second-smallest. Again, we render a screen-filling quad with the correct viewport size. When we're done, we disable additive blending and deactivate the shader.
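Because filterRadius is expressed in texture coordinates, the same value spans a different number of texels on each mip. A small sketch that converts it, assuming a 1920-pixel-wide window and the integer-halved mip widths from Init (`radiusInTexels` is a hypothetical name). With the recommended 0.005f, the kernel reaches about 4.8 texels on the 960-wide mip A but only about 0.3 texels on the 60-wide mip E, which is the "larger radii on larger mips" behaviour described in the upsampling section.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// How many texels a filter radius given in texture coordinates spans on each mip
// (hypothetical helper). Mip widths use the same integer halving as bloomFBO::Init.
std::vector<float> radiusInTexels(float filterRadius, int fullWidth, int levels)
{
    assert(filterRadius >= 0.0f && fullWidth > 0 && levels >= 0);
    std::vector<float> result;
    int width = fullWidth;
    for (int i = 0; i < levels; ++i) {
        width /= 2;
        // A UV offset of filterRadius equals filterRadius * width texels on this mip.
        result.push_back(filterRadius * static_cast<float>(width));
    }
    return result;
}
```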

I provide here the code to setup the quadVAO, in case you need it:

```cpp
float quadVertices[] = {
    // upper-left triangle
    -1.0f, -1.0f, 0.0f, 0.0f, // position, texcoord
    -1.0f,  1.0f, 0.0f, 1.0f,
     1.0f,  1.0f, 1.0f, 1.0f,
    // lower-right triangle
    -1.0f, -1.0f, 0.0f, 0.0f,
     1.0f,  1.0f, 1.0f, 1.0f,
     1.0f, -1.0f, 1.0f, 0.0f
};

unsigned int quadVBO, quadVAO;

void configureQuad() {
    glGenVertexArrays(1, &quadVAO);
    glGenBuffers(1, &quadVBO);
    glBindVertexArray(quadVAO);
    glBindBuffer(GL_ARRAY_BUFFER, quadVBO);
    glBufferData(GL_ARRAY_BUFFER, sizeof(quadVertices), quadVertices, GL_STATIC_DRAW);
    // position attribute
    glVertexAttribPointer(0, 2, GL_FLOAT, GL_FALSE, 4 * sizeof(float), (void*)0);
    glEnableVertexAttribArray(0);
    // texcoord attribute
    glVertexAttribPointer(1, 2, GL_FLOAT, GL_FALSE, 4 * sizeof(float), (void*)(2 * sizeof(float)));
    glEnableVertexAttribArray(1);
    glBindVertexArray(0);
}
```

I'm just using the VAO as a global variable, because I need the quad data in several places. You are free to do something better. :)

Results

We have been going through the important parts, and you should now have an overview of how to integrate this technique into your own applications. Intentionally, I did not touch upon several steps such as what tonemapping operator to use, how to set up the HDR color buffer, or how to render geometry. These would be out of scope for this tutorial. Besides, if you visit some of the other wonderful tutorials on this website, e.g. model loading and light casters, you should be well covered.

Hopefully, the descriptions here are sufficient to help you implement this yourself; otherwise, you may have a look at the full source code. I also have a small video on YouTube demonstrating the bloom effect with various tonemapping operators, link here. The video clip below shows the reference code in action, where the keyboard keys 1-3 switch between no bloom, the "old" bloom implementation, and the technique described in this tutorial. The results really speak for themselves, I think. :)

Conclusion & Discussion

We have been looking at how to implement bloom without threshold, including progressively downsampling and upsampling an HDR color buffer, applying a small 3x3 filter kernel on each mip, and how to mix in the bloom in the final render. I hope you found this interesting and that you learned something.

After seeing the difference in visual quality between thresholded and un-thresholded bloom, it is my conviction that bloom is a technique that has been misunderstood. Bear in mind that this is purely an opinion, as many games and game developers have successfully applied bloom with a threshold. But when looking at high-quality camera lenses, and at the adaptation that our eyes perform, it makes sense to use bloom without a threshold in an effort to imitate the physics of the real world. Bloom should not be about making bright things blurry, but rather about making light bleed out on the camera lens (i.e. the screen buffer). And since there is light everywhere, even in very dark scenes, this approach makes particular sense. In this regard, bloom should also be used sparingly. Bloom is probably at its best when gamers don't notice that it is there, simply because it looks so natural. Naturally, the strength of the bloom may be adapted to various scenes, lighting conditions, and visual styles, but a small value in the range (0.03, 0.15) works really well.

Bonus material 0: Graphical debugging

While the bloom effect was working nicely, I observed some unwanted black boxes in a test application I was working on. The image below shows some of these black boxes, which I definitely did not want in my render:

So I opened RenderDoc (highly recommended software, btw!) and took a capture of the scene. The images below show the result of the first bloom downsample, and the same image "normalized" (a built-in feature of RenderDoc) so that I can more clearly see the differences between very dark colors. The second picture is obtained by setting the white point of the image to a very low value (0.00526):

It's not really clear from this picture alone what is wrong, but let's try to zoom in a bit, which I can do by simply showing some of the later downsamples:

Now it's clear what is going on. Since the first downsample yields some pixels that are exactly 0 (completely black), any multiple involving these pixels will also be black! This effect is then enlarged as the image is downsampled multiple times.

The fix for this problem is really simple (or rather, simple when you understand the problem). We need to clamp the result of the first downsample to some small value greater than 0, so we avoid any multiplications that may yield 0. This is the small fix in the downsample fragment shader:

```glsl
void main()
{
    // [...]
    downsample = max(downsample, 0.0001f);
}
```

This completely removes the black boxes. So now you know what to do, should you experience this annoyance yourself :). It is also a great demonstration of the actual blur radius obtained with this technique. A really large blur radius, in pixels, may be obtained simply by downsampling to an even smaller texture. Instead of 20-30 horizontal and vertical blur passes, we can obtain a more stable bloom effect with larger radii by downsampling to very small textures. In theory, we may go as small as we like, as long as the smallest texture is 1x1 pixels. In contrast to the "old" bloom tutorial on this website, the blur radius here is not fixed by the blur kernel size, so even a very small light source may blur the entire screen if we like.

If you are curious about learning how to use Renderdoc, János Turánszki has a great demonstration for his Wicked Engine devlog [8].

Bonus material 1: Soft threshold

If you really must, it is quite possible to add a threshold to the bloom effect, which softly fades in the threshold instead of having a hard cut. The article in [5] discusses how to do this for Unity, but you should be able to implement it in the fragment shader during the first downsample iteration (from FullRes to A). It will not lead to photo-realistic results, but it may better follow your art direction.
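As an illustration, here is a CPU-side C++ sketch of one widely used soft-knee formulation; whether it matches [5] exactly is an assumption, and the `threshold` and `knee` parameters and the function name are hypothetical. Colors whose brightest channel is below threshold - knee are removed, colors above threshold + knee are scaled by (brightness - threshold) / brightness, and a quadratic curve blends between the two so there is no hard cut. In a shader, the same arithmetic could be applied to the color samples of the first downsample pass.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Rgb { float r, g, b; };

// Soft-knee threshold (hypothetical helper): quadratic ramp inside
// [threshold - knee, threshold + knee], linear (brightness - threshold) above it.
Rgb softThreshold(Rgb c, float threshold, float knee)
{
    assert(threshold >= 0.0f && knee > 0.0f);
    const float brightness = std::max({c.r, c.g, c.b});
    float soft = std::min(std::max(brightness - threshold + knee, 0.0f), 2.0f * knee);
    soft = soft * soft / (4.0f * knee);  // equals knee at the top of the ramp
    const float contribution =
        std::max(soft, brightness - threshold) / std::max(brightness, 1e-5f);
    return {c.r * contribution, c.g * contribution, c.b * contribution};
}
```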

Bonus material 2: Karis average

If you download the slides found at [1], then at slide 166 there's a video clip showing the fireflies effect, which are a result of overly bright subpixels. This can happen with HDR-rendering, and as the slides suggest the solution is to either add multisampling to the downsampling (not universally available) or to apply a Karis average to the first downsampling. The slides describe what a Karis average is and where it was published, if you want to know more. Here, I will simply show a solution:

- For each group of 4 pixels (described in figure 3), compute the Luma value.

- Calculate the Karis average from the Luma values and divide by 4.

- Multiply with the weights.

Luma is computed the same way as luminance, except from gamma-encoded (sRGB) values instead of linear RGB. The code below approximates the sRGB encoding with a power of 1/2.2 and shows the procedure:

```glsl
vec3 PowVec3(vec3 v, float p)
{
    return vec3(pow(v.x, p), pow(v.y, p), pow(v.z, p));
}

const float invGamma = 1.0 / 2.2;
vec3 ToSRGB(vec3 v) { return PowVec3(v, invGamma); }

float RGBToLuminance(vec3 col)
{
    return dot(col, vec3(0.2126f, 0.7152f, 0.0722f));
}

float KarisAverage(vec3 col)
{
    // Formula is 1 / (1 + luma)
    float luma = RGBToLuminance(ToSRGB(col)) * 0.25f;
    return 1.0f / (1.0f + luma);
}
```

Luminance measures the perceived brightness of a pixel; the per-channel weights in the RGBToLuminance function (the Rec. 709 coefficients) reflect that we perceive green as much brighter than the other primaries. I multiply luma by 0.25f because, in the way I designed this shader, the input color is a sum of 4 pixel colors. We then multiply with the weights by slightly modifying our downsample shader:

```glsl
// Check if we need to perform Karis average on each block of 4 samples
vec3 groups[5];
switch (mipLevel)
{
case 0:
    // We are writing to mip 0, so we need to apply Karis average to each block
    // of 4 samples to prevent fireflies (very bright subpixels, leads to pulsating
    // artifacts).
    groups[0] = (a+b+d+e) * (0.125f/4.0f);
    groups[1] = (b+c+e+f) * (0.125f/4.0f);
    groups[2] = (d+e+g+h) * (0.125f/4.0f);
    groups[3] = (e+f+h+i) * (0.125f/4.0f);
    groups[4] = (j+k+l+m) * (0.5f/4.0f);
    groups[0] *= KarisAverage(groups[0]);
    groups[1] *= KarisAverage(groups[1]);
    groups[2] *= KarisAverage(groups[2]);
    groups[3] *= KarisAverage(groups[3]);
    groups[4] *= KarisAverage(groups[4]);
    downsample = groups[0]+groups[1]+groups[2]+groups[3]+groups[4];
    break;
default:
    downsample = e*0.125;
    downsample += (a+c+g+i)*0.03125;
    downsample += (b+d+f+h)*0.0625;
    downsample += (j+k+l+m)*0.125;
    break;
}
```

The variable mipLevel is a uniform int, set to 0 for the first downsampling and to a non-zero value for the others. If you notice fireflies in your renders, you should probably add a Karis average to the first bloom downsampling. It does increase the rendering time, since every group now needs a luma computation with a pow() per channel. However, only the first downsampling pass is affected, and modern GPUs should handle it easily. Also, because the Karis average reduces the dynamic range of the downsampled image, the bloom effect will look slightly weaker (and darker as well). As a compromise, you can increase the bloom strength slightly to compensate.
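Both branches of the switch can be ported to C++ for a single gray channel. This shows why the Karis branch makes the bloom weaker even when there are no fireflies. It is a minimal sketch, not part of the article's renderer. Taps, downsamplePlain, downsampleKaris and uniformPatch are hypothetical names, and the samples a..m are plain floats instead of texture fetches.

```cpp
#include <cassert>
#include <cmath>

// Karis weight for a gray value. For gray input the luminance weights sum to 1,
// so luma reduces to the gamma-encoded value (2.2 gamma approximation).
float karisWeight(float gray) {
    float luma = std::pow(gray, 1.0f / 2.2f) * 0.25f;  // same 0.25 scale as the shader
    return 1.0f / (1.0f + luma);
}

// The 13 samples a..m of the downsample filter, reduced to gray values.
struct Taps {
    float a, b, c, d, e, f, g, h, i, j, k, l, m;
};

// Default branch (mipLevel != 0): the plain 13-tap weights, which sum to 1.
float downsamplePlain(const Taps& t) {
    float result = t.e * 0.125f;
    result += (t.a + t.c + t.g + t.i) * 0.03125f;
    result += (t.b + t.d + t.f + t.h) * 0.0625f;
    result += (t.j + t.k + t.l + t.m) * 0.125f;
    return result;
}

// mipLevel == 0 branch: each weighted group of 4 samples is scaled by its Karis weight.
float downsampleKaris(const Taps& t) {
    const float groups[5] = {
        (t.a + t.b + t.d + t.e) * (0.125f / 4.0f),
        (t.b + t.c + t.e + t.f) * (0.125f / 4.0f),
        (t.d + t.e + t.g + t.h) * (0.125f / 4.0f),
        (t.e + t.f + t.h + t.i) * (0.125f / 4.0f),
        (t.j + t.k + t.l + t.m) * (0.5f / 4.0f),
    };
    float result = 0.0f;
    for (float group : groups) result += group * karisWeight(group);
    return result;
}

// A flat patch where all 13 samples share the same value.
Taps uniformPatch(float v) { return Taps{v, v, v, v, v, v, v, v, v, v, v, v, v}; }
```

On a flat patch of value 1.0, downsamplePlain returns 1.0 while downsampleKaris returns roughly 0.88. The reason is that every weight 1 / (1 + luma) is below 1 for non-black input. That uniform darkening is what the slightly increased bloom strength compensates for. With a single very bright center sample (e = 10000), the Karis branch lands far below the plain one, which is the firefly suppression we are after.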

Bonus material 3: Lens dirt

Another extension commonly attached to bloom is lens dirt. Where bloom aims to model the scattering of light in the lens, lens dirt is an overlay that modulates the bloom effect in certain fixed areas of the lens. The term is used quite loosely and there is no clear definition of how lens dirt should be applied. The most common usage is probably as an additive layer on top of the bloom. In the video clip in [6], you can see such a layer, mostly visible in bright areas of the screen. My immediate motivation was therefore to imitate this effect by slightly modifying how we add the bloom contribution. I used a lens dirt image downloaded from [7], which conveniently is mostly black or white. We want its bright areas to accentuate the bloom, and its dark areas to leave the rendered image unchanged (this is purely my own interpretation!).

We add a uniform variable to control the intensity of the lens dirt and a 2D sampler for its image. We then mix this into the bloom contribution in the shader that applies the bloom effect. In my case this is the tonemapping shader, but your setup may vary. Here is a sketch of the fragment shader:

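```glsl
#version 330 core
in vec2 texcoord;
out vec4 color;

uniform sampler2D hdrTexture;
uniform sampler2D bloomTexture;
uniform sampler2D dirtMaskTexture;
uniform float bloomStrength = 0.04f;
uniform float dirtMaskIntensity = 20.0f;

// tonemap() and ToSRGB() are not shown here and must be defined in this shader:
// ToSRGB() as shown earlier, tonemap() as your tonemapping operator of choice.

vec3 computeBloomMix()
{
    vec3 hdr = texture(hdrTexture, texcoord).rgb;
    vec3 blm = texture(bloomTexture, texcoord).rgb;
    // The dirt mask is flipped vertically; whether you need this depends on how the image is loaded.
    vec3 drt = texture(dirtMaskTexture, vec2(texcoord.x, 1.0f - texcoord.y)).rgb * dirtMaskIntensity;
    // blm + blm*drt == blm * (1 + drt): the bloom is amplified where the dirt mask is bright.
    vec3 col = mix(hdr, blm + blm*drt, vec3(bloomStrength));
    return col;
}

void main()
{
    vec3 col = computeBloomMix();
    col = tonemap(col);
    color = vec4(ToSRGB(col), 1.0f);
}
```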

You may or may not need to flip the lens dirt image vertically (the 1.0f - texcoord.y in the shader), depending on how you load the image. Since blm + blm*drt equals blm * (1 + drt), the bloom is multiplied by a factor that scales linearly with the lens dirt texel. A black texel leaves the bloom unchanged, while a white texel amplifies it by a factor of 1 + dirtMaskIntensity. This dirt-modulated bloom is then linearly interpolated with the HDR color buffer, using bloomStrength as the interpolation factor. There are probably many other ways of using lens dirt, so please share images in the comments if you discover some cool effects! :) Below you can see the same render with/without lens dirt (intensity = 34.451f):

Notice how the lens dirt is visible on the entire screen, and not only in the bright areas. This is because there is bloom everywhere. With thresholded bloom, the bloom texture would be black wherever nothing passed the threshold, so the dirt would only show up around bright areas. We would then have to blend in the lens dirt in some other way to get this look.
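A one-channel CPU port of computeBloomMix() can check the behavior of blm + blm*drt and the point about thresholded bloom. This is a minimal C++ sketch. bloomMix and mixf mirror the shader, and the dirt texel is assumed to lie in [0, 1]. thresholded is a hypothetical hard cutoff used only for illustration, not something this article implements. The blur that would spread thresholded bloom around bright areas is ignored.

```cpp
#include <cassert>
#include <cmath>

// GLSL mix(x, y, a) = x * (1 - a) + y * a.
float mixf(float x, float y, float a) { return x * (1.0f - a) + y * a; }

// One-channel port of computeBloomMix(): hdr and bloom are the samples from
// hdrTexture and bloomTexture, dirtTexel is the dirt mask sample in [0, 1].
float bloomMix(float hdr, float bloom, float dirtTexel,
               float bloomStrength = 0.04f, float dirtMaskIntensity = 20.0f) {
    float drt = dirtTexel * dirtMaskIntensity;
    return mixf(hdr, bloom + bloom * drt, bloomStrength);
}

// Hypothetical hard threshold: bloom input below the cutoff contributes nothing.
float thresholded(float value, float cutoff) {
    return value >= cutoff ? value : 0.0f;
}
```

With a black dirt texel, bloomMix(1.0f, 1.0f, 0.0f) gives the same 1.0 as the plain mix. A white texel raises the bloom term to 21 before blending. For a dim pixel with bloom 0.2, the dirt still adds about 0.17 to a black HDR value. Once that bloom is thresholded away, however, nothing is left for the dirt mask to amplify.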

The effect is exaggerated for the sake of visibility and should probably be used more subtly in a game. It can add a lot of realism and immersion, especially in action games or desert environments. Feel free to experiment!

References

- [1] Jorge Jimenez: Next Generation Post Processing in Call of Duty: Advanced Warfare, ACM SIGGRAPH 2014, blog post

- [2] The Quixotic Engineer: Bloom Disasters, blog post

- [3] Interesting discussion about bloom, gamedev.net

- [4] Kalogirou: How to do good bloom for HDR rendering, web.archive.org

- [5] Jasper Flick: Bloom, Unity tutorial series

- [6] Nenad M.: Syndicate - custome shaders, lens dirt, lens flare, tonemap..., YouTube

- [7] Bloom, docs.unrealengine.com

- [8] János Turánszki: Wicked Engine - Render Breakdown, YouTube